Deploying Kubernetes with Kubeadm and Calico - Part 2

IN PROGRESS

This post is part 2 of a series that details the steps necessary to deploy a Kubernetes cluster with Kubeadm and Calico.

I recently started studying for the Certified Kubernetes Administrator Exam, and I thought writing this post would be helpful for me and for any others who are interested in learning about Kubernetes.

In Part 2, we will go through the specific installation processes on our controller node and our worker nodes. We will complete the cluster initialization, Calico configuration and installation, and also test a pod deployment on our new cluster.

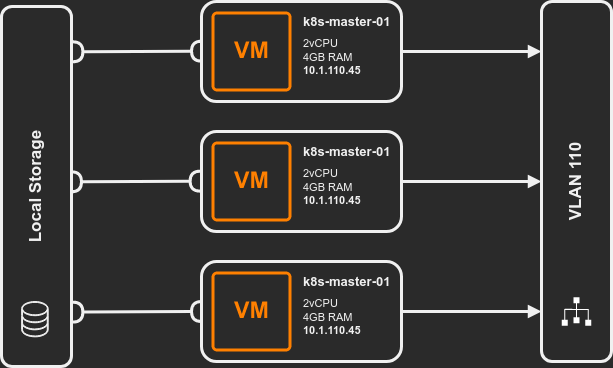

For reference, the topology of the Kubernetes lab is posted below:

Controller Nodes

Installing Kubernetes

Now we install the Kubernetes components. Since this is a controller node, we will need to deploy the following components:

- kubelet
- kubeadm
- kubectl

The following command will update the apt cache and install our components.

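As a rough sketch, the install step might look like the following. It assumes the Kubernetes apt repository is already configured on the node, and the function name `install_controller_packages` is only an illustrative choice. The commands are wrapped in a function so that nothing is installed until you call it.

```shell
#!/usr/bin/env bash
# Sketch: install the Kubernetes packages on the controller node.
# Assumption: the Kubernetes apt repository is already configured.

# Packages the controller node needs.
CONTROLLER_PACKAGES="kubelet kubeadm kubectl"

install_controller_packages() {
  # Refresh the apt cache so the newest package metadata is used.
  sudo apt-get update
  # Word splitting of the package list is intentional here.
  # shellcheck disable=SC2086
  sudo apt-get install -y $CONTROLLER_PACKAGES
}

# Uncomment to run the installation:
# install_controller_packages
```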

Once the installation is complete, we need to tell apt to flag the packages so they won’t be upgraded automatically.

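A minimal sketch of the hold step, using `apt-mark hold` and then `apt-mark showhold` to confirm the result. The function name `hold_kubernetes_packages` is a hypothetical helper, not something from the original post.

```shell
#!/usr/bin/env bash
# Sketch: stop apt from upgrading the Kubernetes packages automatically.

HELD_PACKAGES="kubelet kubeadm kubectl"

hold_kubernetes_packages() {
  # Word splitting of the package list is intentional here.
  # shellcheck disable=SC2086
  sudo apt-mark hold $HELD_PACKAGES
  # List held packages to confirm the hold took effect.
  apt-mark showhold
}

# Uncomment to apply the hold:
# hold_kubernetes_packages
```

Held packages can be released later with `sudo apt-mark unhold` when you are ready for a planned upgrade.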

Configure Kubernetes

Let’s configure the Kubernetes cluster.

Kubeadm Details

We will be initializing the cluster with kubeadm.

The tool kubeadm is designed to create a minimally viable cluster, ready to accept worker nodes.

I’ve documented the majority of the high-level steps it will take below.

For more detailed information regarding the kubeadm init phase, please see the following link:

kubeadm - implementation details

- Preflight checks: Images will be pulled if none are available locally, and checks are run, such as which kernel is running, whether the user is root, and whether swap is on.
- Generate certificates: All certificates necessary to secure communication in the cluster are generated.
- Generate kubeconfig files for control plane components: This process creates all of the kubeconfig files that will be used during the build of the individual control plane components, like the controller manager and the scheduler.
- Generate static Pod manifests: This process generates the pod configuration files for services like the API server, controller manager, and scheduler.
- Configure TLS bootstrapping for node joining: This process sets up the necessary components to support nodes joining the cluster with a token.

Again, this was a very high-level and incomplete look at the kubeadm init phase. I highly suggest reviewing the documentation around this process, as it is rather complex.

When we use kubeadm init, we will be passing the --pod-network-cidr argument, which will identify the network subnet we want to enable our pods to use. Initially, this network is not directly exposed to other networks and will be used primarily for pods to communicate.

--pod-network-cidr: This is the network that the pods will be using.

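The initialization could be sketched as below. The CIDR value is an assumption: 192.168.0.0/16 is the pool used in Calico's example custom-resources.yaml manifest, but the value used in this lab is not shown, so substitute your own. `init_control_plane` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: initialize the control plane with an explicit pod network.
# Assumption: POD_CIDR defaults to Calico's example pool; override it
# if that range overlaps with your existing networks.

POD_CIDR="${POD_CIDR:-192.168.0.0/16}"

init_control_plane() {
  sudo kubeadm init --pod-network-cidr="$POD_CIDR"
}

# Uncomment to initialize the cluster:
# init_control_plane
```

Whatever range you choose should match the IP pool configured for Calico later, otherwise pods may receive addresses the network plugin does not expect.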

Once Kubernetes has initialized, you should see the following message:


About the discovery token: The initial discovery token is only valid for 24 hours from the time of creation. You can create a new token with the following command:

sudo kubeadm token create --print-join-command

This command prints a complete kubeadm join command containing a new token that can be used to join the cluster.

Take note of the whole kubeadm join command, as we will be using it later for our nodes to join the cluster.

Post-Configuration Steps

We need to do a few housekeeping steps before joining our worker nodes to our cluster.

As noted in the message from our setup process, we should run a few commands to start using our cluster:

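For reference, these are the commands that kubeadm init suggests at the end of a successful run for a regular (non-root) user. They are wrapped here in a hypothetical `setup_kubeconfig` function so the snippet can be read or sourced without changing anything.

```shell
#!/usr/bin/env bash
# Sketch: give the current user a kubeconfig for the new cluster.

KUBECONFIG_DIR="$HOME/.kube"

setup_kubeconfig() {
  mkdir -p "$KUBECONFIG_DIR"
  # -i prompts before overwriting an existing config file.
  sudo cp -i /etc/kubernetes/admin.conf "$KUBECONFIG_DIR/config"
  # Hand ownership of the copied file to the current user.
  sudo chown "$(id -u):$(id -g)" "$KUBECONFIG_DIR/config"
}

# Uncomment to copy the admin kubeconfig:
# setup_kubeconfig
```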

To validate that our configuration worked, we can run kubectl get nodes -o wide, which should output node data for us to see.


Now we can finish up our work on the controller node by adding our networking and network policy components.

For the purposes of my lab, I will be deploying the Calico operator and manifests. For more details around Calico, I suggest checking into the documentation available here: Calico Documentation

Calico operator and manifests: These components will be responsible for managing the general lifecycle of our Calico cluster and deploying the networking components.

Install the operator and manifests on your cluster:

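One way this step might look is sketched below. The Calico version is an assumption (the version used in this lab is not stated), so check the Calico documentation for the release that matches your cluster. `install_calico` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: install the Tigera operator and the Calico custom resources.
# Assumption: CALICO_VERSION is only an example release.

CALICO_VERSION="v3.26.1"
CALICO_MANIFESTS="https://raw.githubusercontent.com/projectcalico/calico/${CALICO_VERSION}/manifests"

install_calico() {
  # The operator manages the lifecycle of the Calico installation.
  kubectl create -f "${CALICO_MANIFESTS}/tigera-operator.yaml"
  # The custom resources tell the operator what to deploy.
  kubectl create -f "${CALICO_MANIFESTS}/custom-resources.yaml"
}

# Uncomment to install Calico:
# install_calico
```

If your pod network differs from the pool in custom-resources.yaml, download that manifest and adjust its cidr field before creating it.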

This command will create the deployment of Calico in our cluster.

Once this has been completed, we can verify the deployment shows in Kubernetes with kubectl get deployment:


We can also watch the deployment to confirm that the pods are running.

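The two verification steps above might be sketched as follows. The namespaces are assumptions based on an operator-based Calico install, where the operator deployment lives in tigera-operator and the Calico pods in calico-system. `check_calico` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: confirm the operator deployment exists, then watch the pods.
# Assumption: namespaces follow the operator-based install layout.

OPERATOR_NAMESPACE="tigera-operator"
CALICO_NAMESPACE="calico-system"

check_calico() {
  kubectl get deployment -n "$OPERATOR_NAMESPACE"
  # --watch keeps streaming status changes; press Ctrl-C to stop.
  kubectl get pods -n "$CALICO_NAMESPACE" --watch
}

# Uncomment to run the checks:
# check_calico
```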

Install calicoctl

To manage and perform administrative functions on Calico, we will need calicoctl.

This will also allow us to see the detailed cluster status of Calico.

For more details on calicoctl, see the following link: calicoctl

We will be installing calicoctl as a kubectl plugin, which will allow us to call calicoctl from within kubectl.

On the controller node, we will download the calicoctl binary, set the file to be executable, and move it to /usr/bin.

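A sketch of those three steps is shown below. The release version and the linux-amd64 build are assumptions; pick the calicoctl release that matches your Calico version and architecture. kubectl discovers plugins by file name, so the binary is saved as kubectl-calico. `install_calicoctl_plugin` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: install calicoctl as a kubectl plugin.
# Assumptions: CALICO_VERSION and the linux-amd64 build are examples.

CALICO_VERSION="v3.26.1"
CALICOCTL_URL="https://github.com/projectcalico/calico/releases/download/${CALICO_VERSION}/calicoctl-linux-amd64"

install_calicoctl_plugin() {
  # Download the binary under the name kubectl expects for a plugin.
  curl -L "$CALICOCTL_URL" -o kubectl-calico
  chmod +x kubectl-calico
  # Move it onto the PATH so kubectl can find it.
  sudo mv kubectl-calico /usr/bin/
}

# Uncomment to install the plugin:
# install_calicoctl_plugin
```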

We can test calicoctl by calling it through kubectl, for example with kubectl calico -h, which should print the calicoctl help text.

Kubectl Command Completion

One final task we will complete on our controller node is enabling kubectl command completion.

I will assume you are using Ubuntu 20, as I am in my lab. If you are not, I’d recommend looking at the Kubernetes documentation regarding bash-completion: kubectl - auto-completion

To verify that the kubectl command completion is not configured, you can start by typing kubectl in your console and pressing the tab key several times. If no kubectl specific commands show up, the completion script has not been loaded.

Before we configure, we should check if we have the bash-completion package installed. We can verify by running sudo apt-get install bash-completion. If the package is not installed, it will request permission to install.


We need to enable kubectl auto-completion by ensuring the kubectl completion script is loaded in all of our shell sessions when interacting with the controller node. We can do that by running the following command.

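A minimal sketch of this step follows. The line being added is the one the Kubernetes documentation suggests for bash. The `enable_kubectl_completion` helper and its optional file argument are illustrative additions that keep the step safe to run more than once.

```shell
#!/usr/bin/env bash
# Sketch: load kubectl completion in every new bash session.

COMPLETION_LINE='source <(kubectl completion bash)'

enable_kubectl_completion() {
  # First argument is the rc file to edit; defaults to ~/.bashrc.
  rc_file="${1:-$HOME/.bashrc}"
  # Append the line only if it is not already present.
  if ! grep -qxF "$COMPLETION_LINE" "$rc_file" 2>/dev/null; then
    echo "$COMPLETION_LINE" >> "$rc_file"
  fi
}

# Uncomment to update ~/.bashrc:
# enable_kubectl_completion
```

Checking for the line first means repeated runs do not stack duplicate entries in the rc file.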

After running the above command, refresh your session by logging out and back in.

You should now be able to tab-complete commands in kubectl.

Worker Nodes

We first need to make sure our general node prerequisites have been met on our worker nodes, and then we can begin the worker-node-related setup.

Prerequisites

First, we will need to install our Kubernetes components. We will need to install both kubelet and kubeadm on our worker nodes. We can accomplish this with the following command:

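On a worker node the install might be sketched as below. As on the controller, it assumes the Kubernetes apt repository is already configured. The hold step is an optional addition mirroring what was done on the controller, and `install_worker_packages` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: install the Kubernetes packages a worker node needs.
# Assumption: the Kubernetes apt repository is already configured.

WORKER_PACKAGES="kubelet kubeadm"

install_worker_packages() {
  sudo apt-get update
  # Word splitting of the package list is intentional here.
  # shellcheck disable=SC2086
  sudo apt-get install -y $WORKER_PACKAGES
  # Optional: keep apt from upgrading these packages automatically.
  # shellcheck disable=SC2086
  sudo apt-mark hold $WORKER_PACKAGES
}

# Uncomment to run the installation:
# install_worker_packages
```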

Joining Worker Nodes

Now that we have our prerequisites completed, we can run our kubeadm join command to add our node to the cluster.

Note! You will need a generated kubeadm join command with the cluster-specific configuration details to join your worker nodes to the cluster.

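To illustrate the shape of the join command, the sketch below assembles one from its three cluster-specific parts. Every value shown is a placeholder, and `join_command` is a hypothetical helper. In practice it is simpler to copy the exact command printed by kubeadm init or by sudo kubeadm token create --print-join-command.

```shell
#!/usr/bin/env bash
# Sketch: print a kubeadm join command from its cluster-specific parts.
# All example values below are placeholders, not real credentials.

join_command() {
  # $1 = API server endpoint (host:port)
  # $2 = bootstrap token
  # $3 = SHA-256 hash of the cluster CA certificate
  printf 'sudo kubeadm join %s --token %s --discovery-token-ca-cert-hash sha256:%s\n' \
    "$1" "$2" "$3"
}

# Print an example command; review it, then run it on the worker node.
join_command "10.0.0.10:6443" "abcdef.0123456789abcdef" "<ca-cert-hash>"
```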

After this has been completed, you should be able to check the node status on the controller node. Run kubectl get nodes there to verify that the worker node has joined the cluster.